I’ve been looking for AI use cases in B2B SaaS and recently found a really interesting one from Pipedream. I think it’s worth introducing to more B2B companies.

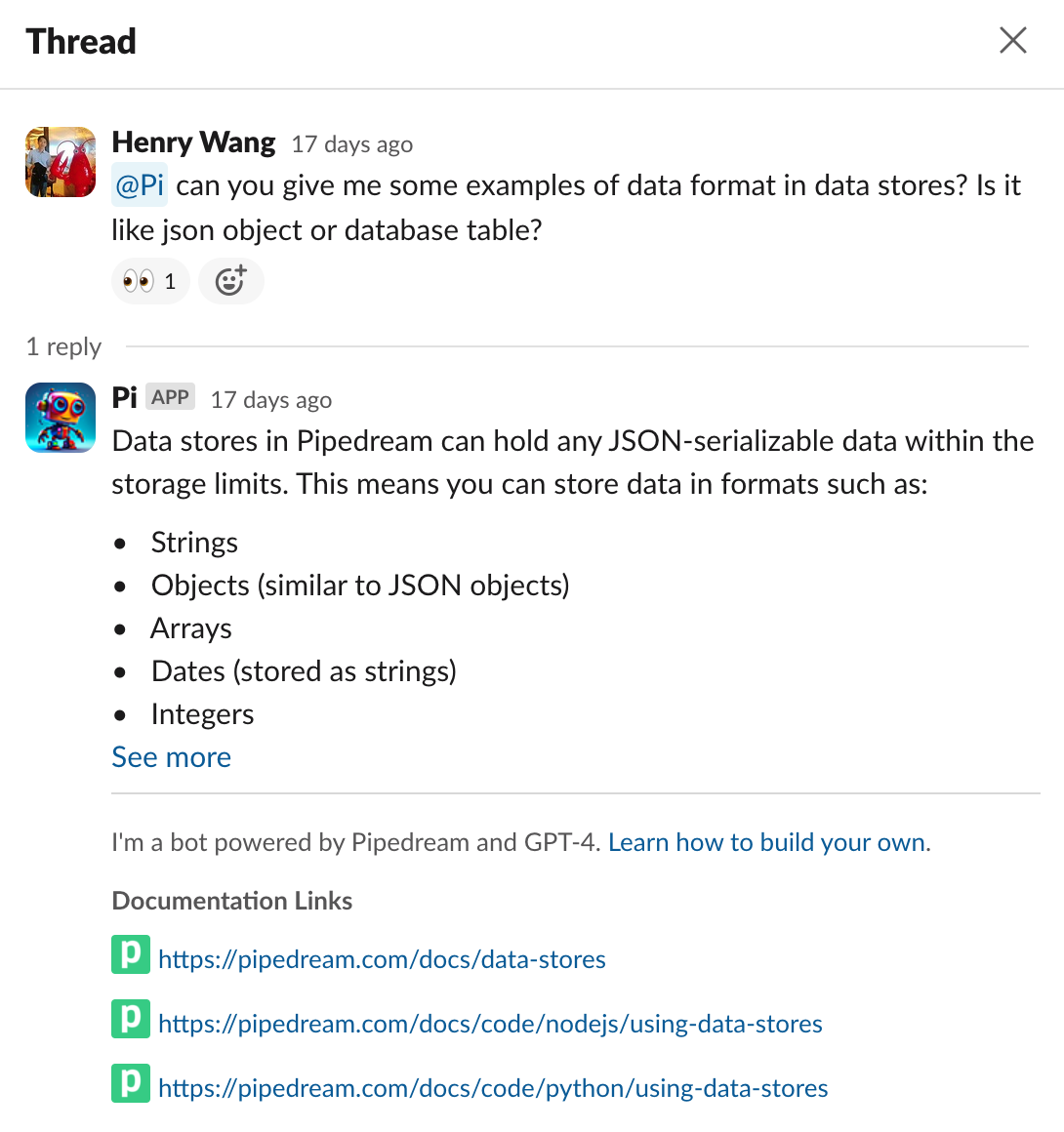

Pipedream is an automation platform for developers, and they created this Slack bot, Pi, for their Slack community. Ever since I joined their Slack channel, I’ve seen people chat with Pi every day about all kinds of Pipedream questions. Pi is built on top of Pipedream’s official documentation, so its answers are specific to their business context, unlike the generic answers from ChatGPT. It definitely helps both the Pipedream team and the community a lot. After this success, the Pipedream team even shared a detailed article about how to create your own chatbot.

However, before we start creating a similar bot, it’s useful to know where we came from and where we’re heading.

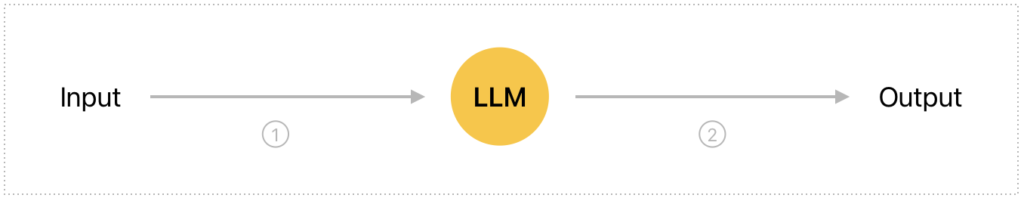

LLM I/O

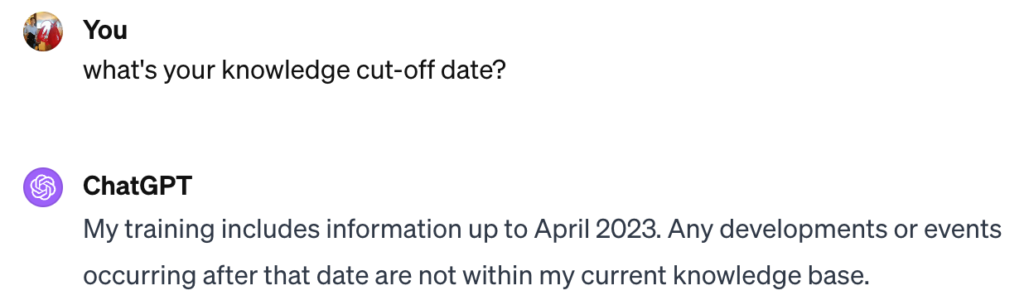

If you are a ChatGPT user (I’m sure you are), you are familiar with what this means: you ask ChatGPT a question, and you get an answer. Simple. But one issue is that there is always a knowledge cutoff date.

Plus, these LLMs are not trained specifically for your business, so they will never be experts in your domain. Lastly, although feeding LLMs your own knowledge base can tremendously improve the quality of the answers, in some cases you might also want LLMs to access your proprietary data (CRM, support platform, internal tools, etc.) and even take actions.

Here come LLM agents.

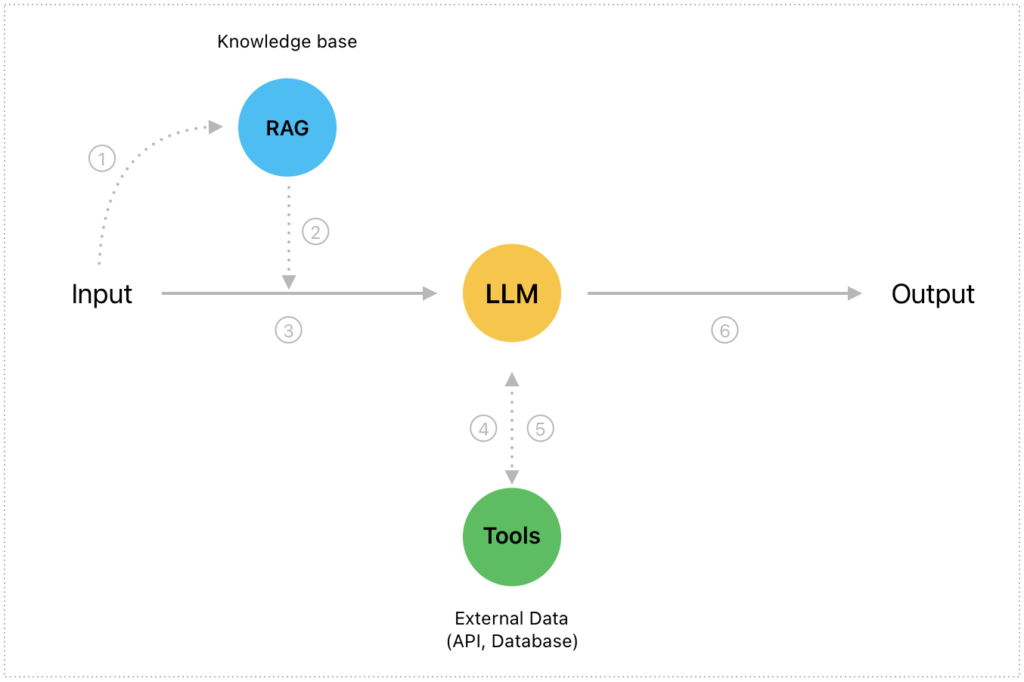

LLM Agents

LLM-powered agents can not only draw on your up-to-date domain knowledge (RAG, Retrieval-Augmented Generation) but also take actions (Tools) when needed.

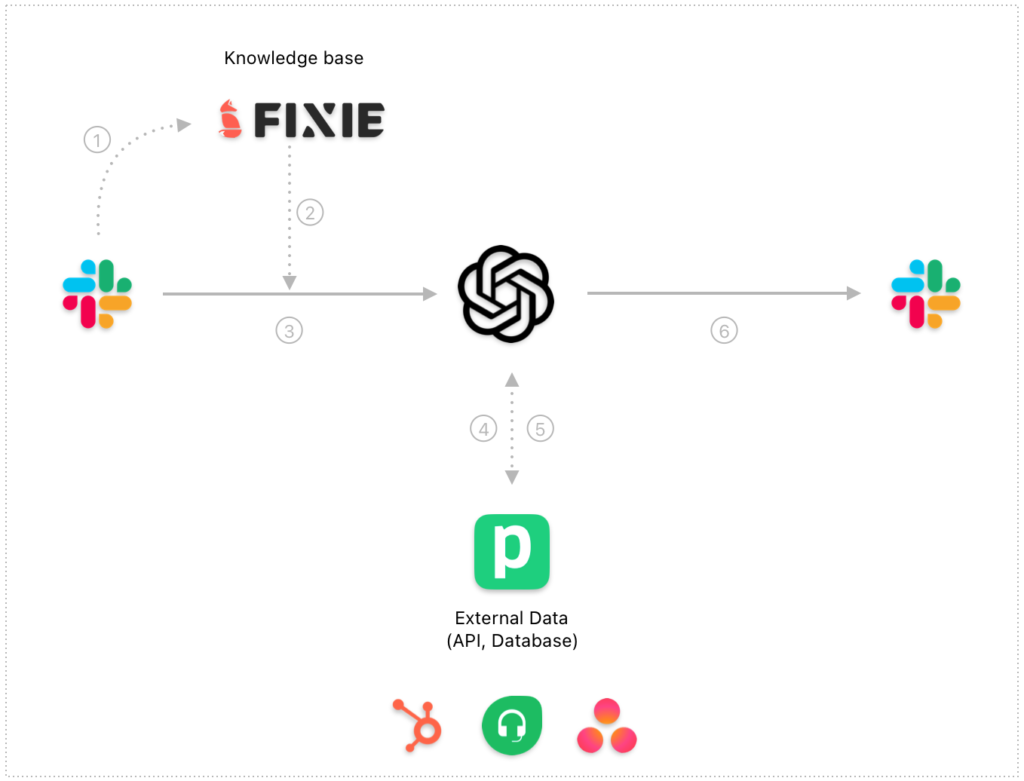

As shown in the diagram, here is what happens within this system:

- Instead of sending user input directly to an LLM, we first send it to our RAG engine, which is normally a vector database built from our knowledge base, such as our websites, use cases, documents, etc.

- The RAG engine returns a few matched documents or pieces of content relevant to the user input.

- We send the original user input, together with the matched documents returned from the RAG engine, to our LLM.

- The LLM decides whether more information is needed to answer the question well and whether any available tools or actions can provide it. If so, the LLM calls external APIs or databases, such as our CRM and support platform.

- The API calls return raw responses.

- Our LLM then prepares the final answer for the user based on (1) the original user input, (2) the matched documents from RAG, and (3) the responses from external platforms.
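The steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not a real implementation: `retrieve`, `plan_tool_call`, and `lookup_ticket` are hypothetical stand-ins for the RAG engine, the LLM's decision step, and an external support platform, and the knowledge base is made-up sample text.

```python
from __future__ import annotations

import re

# Made-up sample knowledge base; a real system would use a vector database.
KNOWLEDGE_BASE = [
    "Workflows can be triggered by HTTP requests or on a schedule.",
    "Connected accounts store the credentials used by integrations.",
    "Billing is based on the number of credits used per month.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(user_input: str, top_k: int = 2) -> list[str]:
    """Stand-in for the RAG engine: rank documents by shared words."""
    query = tokenize(user_input)
    scored = [(len(query & tokenize(doc)), doc) for doc in KNOWLEDGE_BASE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def lookup_ticket(ticket_id: str) -> dict:
    """Stand-in for an API call to an external support platform."""
    return {"ticket_id": ticket_id, "status": "open"}


TOOLS = {"lookup_ticket": lookup_ticket}


def plan_tool_call(user_input: str) -> tuple[str, str] | None:
    """Stand-in for the LLM deciding whether a tool is needed.

    Here the "decision" is a simple rule: a ticket reference such as #42
    means we should look the ticket up.
    """
    match = re.search(r"#(\w+)", user_input)
    return ("lookup_ticket", match.group(1)) if match else None


def gather_context(user_input: str) -> dict:
    """Collect the three ingredients the LLM uses for its final answer."""
    documents = retrieve(user_input)        # RAG lookup
    tool_results = []
    plan = plan_tool_call(user_input)       # does the LLM want a tool?
    if plan is not None:
        name, argument = plan
        tool_results.append(TOOLS[name](argument))  # raw API response
    return {
        "user_input": user_input,
        "documents": documents,
        "tool_results": tool_results,
    }
```

Calling `gather_context("How are workflows triggered? Also check ticket #42")` returns the original question, the one matching document, and the ticket lookup result. In a real agent, that dictionary would be turned into the final prompt for the LLM.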

LLM Powered Slack Bot

Finally, let’s see how to create a Slack bot that can refer to our knowledge base and call external data sources to generate better answers.

Here is the tech stack I chose for the example.

UI (Input & Output): Slack is the perfect UI for handling inputs and outputs because it saves you the time of building your own UI, and users are already used to communicating this way.

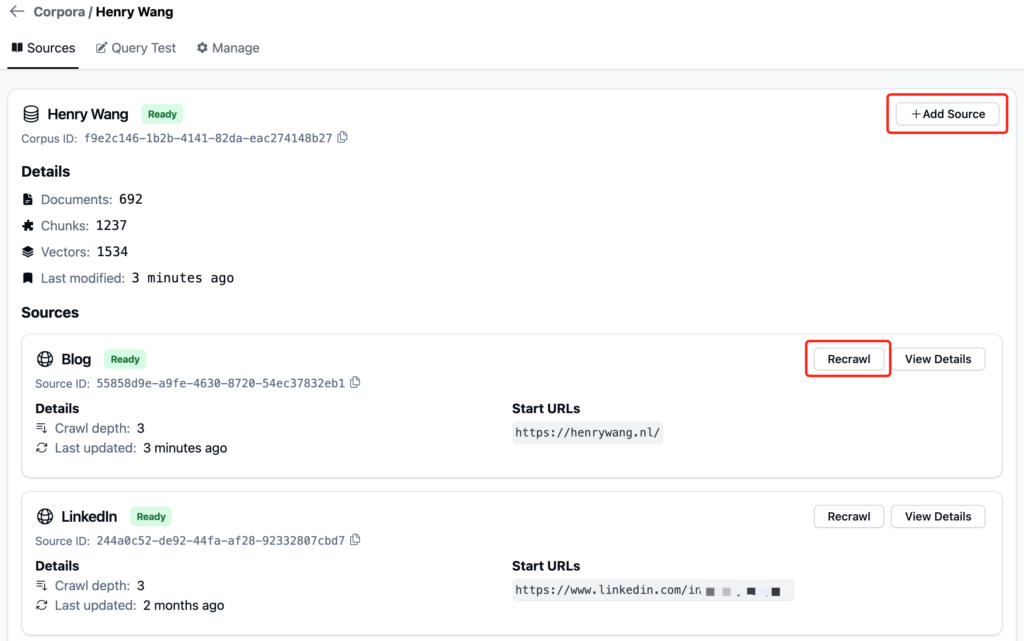

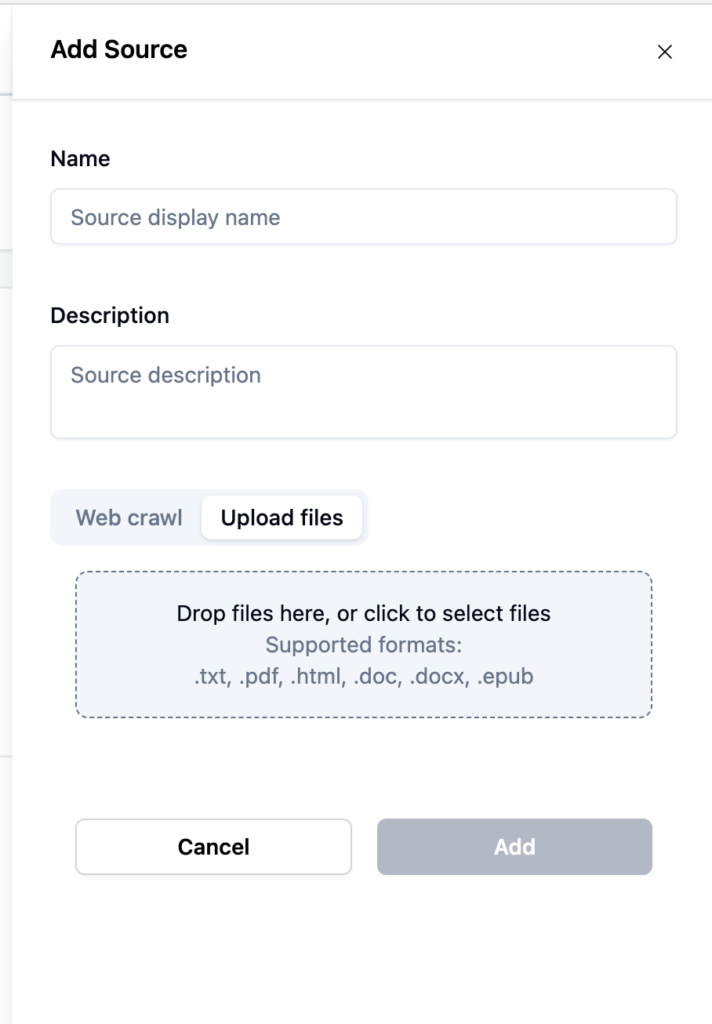
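To make the Slack side concrete, here is a minimal sketch of how a bot might pull the question out of an incoming mention and prepare a threaded reply. It assumes the bot receives Slack Events API payloads for `app_mention` events and replies with `chat.postMessage`; the helper names `extract_question` and `build_reply` are my own, and the sketch only builds the reply arguments without sending anything.

```python
import re
from typing import Optional

# Slack encodes a user mention in message text as <@USERID>.
MENTION_PATTERN = re.compile(r"<@[A-Z0-9]+>")


def extract_question(payload: dict) -> Optional[str]:
    """Return the user's question from an app_mention event, else None."""
    event = payload.get("event", {})
    if event.get("type") != "app_mention":
        return None
    # Drop the bot mention so only the question itself is left.
    return MENTION_PATTERN.sub("", event.get("text", "")).strip()


def build_reply(payload: dict, answer_text: str) -> dict:
    """Build chat.postMessage arguments that reply in the same thread."""
    event = payload["event"]
    return {
        "channel": event["channel"],
        "text": answer_text,
        # Reply in the existing thread, or start one under the mention.
        "thread_ts": event.get("thread_ts", event["ts"]),
    }
```

Replying in a thread keeps each question and its answer together, which matters in a busy community channel.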

RAG: The RAG engine should (1) make it easy to add and update the knowledge base in different formats, such as web pages, PDFs, and docs, and (2) provide high-quality similarity search so that the matched documents are relevant to user inputs.

I chose Fixie for this example.

Fixie AI also supports managing the knowledge base through APIs, so it’s possible to update it in real time.
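To show what those two requirements mean in practice, here is a toy knowledge base with an update method and a similarity search. This is not how Fixie works internally and does not use its API; the `KnowledgeBase` class is hypothetical, and it uses simple word counts where a real RAG engine would use learned embeddings.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy "embedding": word counts. Real engines use learned vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class KnowledgeBase:
    """Hypothetical in-memory store with upsert and similarity search."""

    def __init__(self) -> None:
        self._vectors: dict[str, Counter] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Add a document, or replace it if doc_id already exists."""
        self._vectors[doc_id] = embed(text)

    def search(self, query: str, top_k: int = 1) -> list[tuple[str, float]]:
        """Return up to top_k (doc_id, score) pairs, best match first."""
        query_vector = embed(query)
        scored = [
            (doc_id, cosine_similarity(query_vector, vector))
            for doc_id, vector in self._vectors.items()
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return [pair for pair in scored[:top_k] if pair[1] > 0]
```

Because `upsert` replaces a document in place, the next search immediately reflects the change, which is the behavior you want from a knowledge base that is updated through an API.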

LLM: GPT-4 is currently the dominant model that supports both text and images. GPT models also support function calling, which makes API calls very easy.
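As a rough sketch of what function calling looks like from our side: we describe each tool to the model with a JSON schema, the model replies with the tool name and JSON-encoded arguments, and our code runs the tool and sends the result back. The example below assumes the tool format of OpenAI's Chat Completions API; `get_customer_plan` is a hypothetical CRM lookup that returns canned data, and no API request is actually made.

```python
import json

# Tool description passed to the model in the `tools` parameter.
TOOL_SPECS = [
    {
        "type": "function",
        "function": {
            "name": "get_customer_plan",
            "description": "Look up a customer's subscription plan in the CRM.",
            "parameters": {
                "type": "object",
                "properties": {"email": {"type": "string"}},
                "required": ["email"],
            },
        },
    }
]


def get_customer_plan(email: str) -> dict:
    """Hypothetical CRM lookup; returns canned data for illustration."""
    return {"email": email, "plan": "pro"}


HANDLERS = {"get_customer_plan": get_customer_plan}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call requested by the model.

    Returns a `tool` role message to append to the conversation so the
    model can use the result in its final answer.
    """
    function = tool_call["function"]
    arguments = json.loads(function["arguments"])  # arguments arrive as a JSON string
    result = HANDLERS[function["name"]](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }
```

In the stack described here, the body of a handler like `get_customer_plan` could call out to an external platform instead of returning canned data.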

Tools: Pipedream has built-in integrations with thousands of apps and can serve as the tool management platform behind the scenes, so GPT can talk to external data sources easily. This can significantly shorten development time. Plus, Pipedream handles authentication for you, which takes care of much of the heavy lifting.

In summary, LLM-powered agents are revolutionizing B2B SaaS support by providing highly relevant, context-aware assistance through RAG and Tools. The combination of Slack for the UI, Fixie for knowledge retrieval, and Pipedream for taking actions harnesses the strength of GPT-4 to create a responsive and intelligent support system. I’m looking forward to more use cases in the B2B world!